A Brief Evolution of the Scientific Evidence Base, and the Birth of EBP

In the very beginnings of patient care, scientific research-based diagnoses and treatments were nonexistent. We relied on the experience, observations, whims, and biases of the practitioner. The patient population was essentially relegated to seeking out the most heralded practitioners, based on word of mouth (or effective self-marketing). The more desperate the condition, the more open-minded people tend to become about possible treatments. Without a formal scientific evidence base for practicing medicine, all kinds of wild claims and ineffective (or dangerous) practices pervaded the clinical landscape. There was no repository of peer reviewed scientific publications, let alone one that was easily accessible to practitioners and the public.

In 1960, the National Library of Medicine established a journal citation database called MEDLINE. In 1996 PubMed was established, and essentially functioned as the parent database of MEDLINE, enabling the public to freely search this database (for studies & abstracts) by 1997. In 2000, PubMed Central was established as a database/repository entirely consisting of scientific articles in free full-text. In summary, it’s quite stunning to think that what we know as the formal body of peer reviewed/scientific literature (or what the scientific community colloquially calls, “the literature”) has been established only within roughly the past 64 years. And just think, it’s only been a quarter-century that the general public outside of educational institutions has had free/open access to the literature.

Now that we have amassed a substantial and continually growing body of scientific literature, it makes sense to use it as a starting point of investigation when attempting to solve problems and reach goals related to human physiology. The term evidence-based fitness (EBF) is a spinoff of the term evidence-based practice (EBP), which has its origins in the term evidence-based medicine (EBM). Back in 1996, Sackett defined EBM as the integration of clinical expertise with the best external evidence [1]. A decade later, the American Psychological Association [2] broadened and nuanced the definition by defining EBP as “the integration of the best available research with clinical expertise in the context of patient characteristics, culture and preferences.” So, we need to keep in mind that the scientific literature is not the end-all, or the infallible oracle of truth.

The term scientism has been used to denote an over-reliance on, or uncritical trust in, science (and published research) to answer all of our burning questions. EBP aims to increase objectivity by avoiding the extremes of solely relying on scientific findings, or solely relying on the observations and anecdotes of highly accomplished clinicians, coaches, or athletes. While science – as a set of guiding principles – might actually be perfect, research (the vehicle to carry out scientific principles) is inevitably imperfect since it’s a human endeavor. And of course, humans commit errors. Despite scientific research being our best tool for understanding the natural world, published research is ripe for criticism, and it’s important to understand its strengths and limitations.

Overview & Critique of the Two Major Types of Scientific Research

A 10,000-foot aerial view of research is that it can be divided into two major types: experimental research and observational research. The exemplar of experimental research is the randomized controlled trial (RCT), often referred to as the “gold standard” type of research, since its design best enables us to determine causal relationships between the variables we’re examining. However, in spite of this strength, typical weaknesses of RCTs are small numbers of participants, short trial durations, and a lack of practical or clinical relevance (due to insufficient or excessive dosing, as well as protocols that do not reflect real-world practice). The latter shortcoming is also called a lack of external validity. So, the most tightly controlled experiment (with high internal validity) can still fall short of real-world relevance.

Observational research (which encompasses epidemiology) has the advantage of examining or tracking large populations over long periods of time, enabling the study of disease development and death (mortality). The most rigorous type of observational research is the prospective cohort design. The main weakness of observational research is its lack of control of potentially confounding variables, and its inherent difficulty in establishing causal relationships. The cliche that correlation does not automatically equal causation comes from false associations drawn from observational data. It’s specifically the latter quality that puts observational research below experimental research in the traditional hierarchy of evidence (I’m sure most of you have seen some variant of the evidence pyramid). You’ll invariably see researchers specializing in RCTs agree with them being the “gold standard,” while epidemiologists will vehemently contest this opinion, and provide a case for observational research being capable of establishing causation, based on fulfilling what are known as the Bradford Hill criteria [3].

I’ve actually seen bros on social media completely dismiss nutritional epidemiology, but that in itself is a bias to be avoided. Unsurprisingly, people will tend to dismiss or deify research based upon whether or not it aligns with their beliefs (regardless of the research type). The reality is that we need both types of research to gain a more complete understanding of how the body works. Each type has its strengths and limitations; each picks up where the other falls short. Yes, observational research lacks the control measures and internal validity of experimental research. And yes, experimental research often lacks the external validity and applicability to real-world scenarios outside of the sterile conditions of the lab. However, when there is a consistent convergence of evidence from experimental and observational studies (when both types of research consistently generate similar answers to a given research question), we can be confident that we’ve come closer to the truth. Summational research such as systematic reviews, meta-analyses, and narrative reviews serves to synthesize and make sense of the sea of research findings on a given topic.

The Other Component of EBP: Field Observations and Coaching Experience

Hopefully the previous section gave a sufficient taste of the necessity as well as the fallibility of scientific research. The other major component of EBP consists of the field observations (and yes, anecdotes) of practitioners, and even the trainees/athletes themselves – who in some cases have mastered their own domain. There is valuable knowledge to be learned from not just the ivory tower of academia, but also the trenches. Some might argue that wisdom from the field is just as valuable, and often even more so, since it by definition deals with practice rather than merely theory. The validity of field observations is strengthened when there is a consistency of reports across several practitioners (preferably without vested interests in the given protocol or agent). The strength of these observations can also be influenced by the level of experience, education, accomplishments, reasoning capability, and science literacy of the person relaying or prescribing the agent/protocol. Unfortunately, there are plenty of coaches at professional levels who still fall for pseudoscience due to their own cognitive biases or personal ideologies.

Despite these limitations, we need to rely on field observations to compensate for the shortcomings of academic research. There is a vast expanse of unknown/gray area that scientific research has not covered. Since the scientific research publication process is very slow (research can only examine one facet of a given topic at a time), it’s common for research findings to lag decades behind what coaches and practitioners implement successfully in the field. A prime example of this is the “science-based” recommended dietary allowance (RDA) for protein being 0.8 g/kg, when a daily protein intake of about double this amount is more effective for clinical, anthropometric, and athletic applications [4,5].
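To put the protein example into daily numbers, here is a minimal arithmetic sketch. The 0.8 g/kg figure is the RDA cited above; the higher figure is simply that value doubled, to mirror "about double this amount," and is not a precise recommendation. The function name and the 75 kg body mass are hypothetical, chosen only for illustration.

```python
# RDA for protein as stated in the text, in grams per kilogram of body mass per day.
RDA_G_PER_KG = 0.8
# Assumption: "about double" is taken literally as 2 x RDA for illustration.
HIGHER_G_PER_KG = 2 * RDA_G_PER_KG


def daily_protein_g(body_mass_kg: float, g_per_kg: float) -> float:
    """Return daily protein in grams for a given body mass and per-kg target."""
    if body_mass_kg <= 0:
        raise ValueError("body mass must be positive")
    # Round to one decimal place to avoid floating-point noise in the output.
    return round(body_mass_kg * g_per_kg, 1)


if __name__ == "__main__":
    body_mass_kg = 75.0  # hypothetical trainee
    print("RDA-based intake (g/day):", daily_protein_g(body_mass_kg, RDA_G_PER_KG))
    print("Doubled intake (g/day):", daily_protein_g(body_mass_kg, HIGHER_G_PER_KG))
```

For this hypothetical 75 kg trainee, the RDA works out to 60 g of protein per day, while doubling it gives 120 g per day, which shows how large the gap between the two targets is in practice.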

Summing It Up

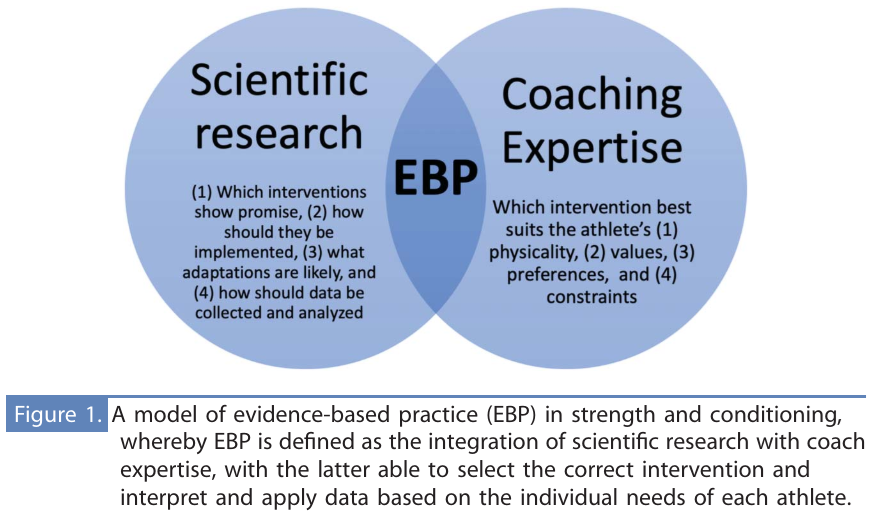

Contrary to the impression left by internet debates, being “evidence-based” does not mean having the ability to cite a study supporting or refuting a given claim. EBP is rooted in the understanding that science (peer reviewed research) and practice (field experience) must both be considered in order to push knowledge forward while achieving the best results for patients, clients, and trainees. Since a picture is worth a thousand words, I will save you from further reading with the following diagram that elegantly captures the interlocking components of EBP – credit to Dr. Anthony Turner (Professor in Sports & Exercise Science at London Sport Institute) [6]:

_________________________________________________

Alan Aragon is the Chief Science Officer of the Nutritional Coaching Institute. With over 30 years of success in the field, he is known as one of the most influential figures in the fitness industry’s movement towards evidence-based information. Alan has numerous peer reviewed publications that continue to shape the practice guidelines of professionals, and the results of their clientele. He is the founder and Editor-In-Chief of Alan Aragon’s Research Review (AARR), the original and longest-running research review publication in the fitness industry.

References

1. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996 Jan 13;312(7023):71-2.

2. Lukić P, Žeželj I. Delineating between scientism and science enthusiasm: Challenges in measuring scientism and the development of novel scale. Public Underst Sci. 2023 Dec 31:9636625231217900.

3. Shimonovich M, Pearce A, Thomson H, Keyes K, Katikireddi SV. Assessing causality in epidemiology: revisiting Bradford Hill to incorporate developments in causal thinking. Eur J Epidemiol. 2021 Sep;36(9):873-887.

4. Jäger R, Kerksick CM, Campbell BI, Cribb PJ, Wells SD, Skwiat TM, Purpura M, Ziegenfuss TN, Ferrando AA, Arent SM, Smith-Ryan AE, Stout JR, Arciero PJ, Ormsbee MJ, Taylor LW, Wilborn CD, Kalman DS, Kreider RB, Willoughby DS, Hoffman JR, Krzykowski JL, Antonio J. International Society of Sports Nutrition Position Stand: protein and exercise. J Int Soc Sports Nutr. 2017 Jun 20;14:20.

5. Morton RW, Murphy KT, McKellar SR, Schoenfeld BJ, Henselmans M, Helms E, Aragon AA, Devries MC, Banfield L, Krieger JW, Phillips SM. A systematic review, meta-analysis and meta-regression of the effect of protein supplementation on resistance training-induced gains in muscle mass and strength in healthy adults. Br J Sports Med. 2018 Mar;52(6):376-384.

6. Turner AN. Strength and Conditioning Journal. April 2, 2024. DOI: 10.1519/SSC.0000000000000840.